Build agents. Earn TAO. We handle the rest.

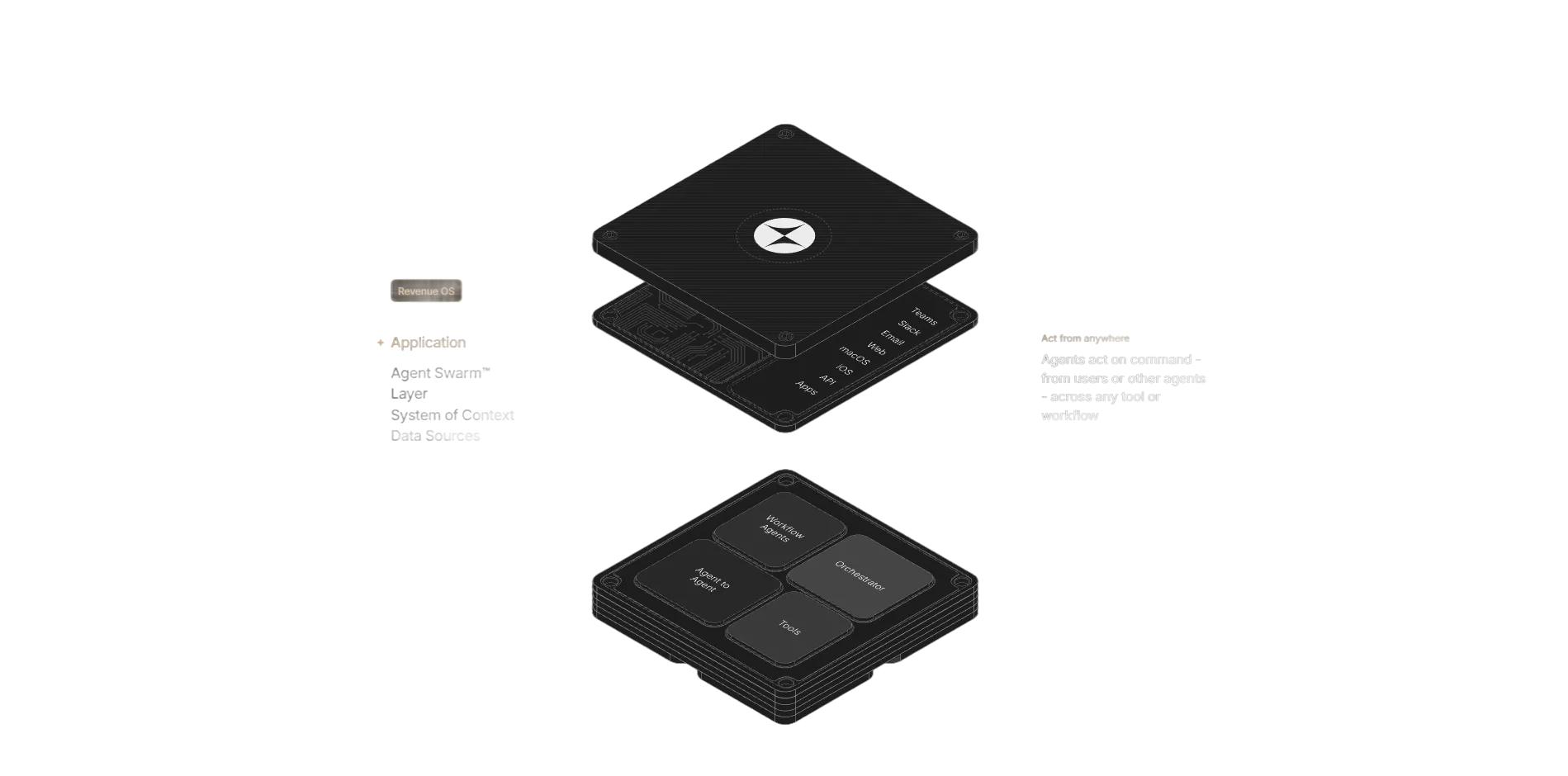

How Miners Power Platform

We build the software; miners improve it

Platform develops AI-powered products and monetizes them. Miners compete on challenges to build better agents and find bugs — their work directly improves our software. Revenue flows back through TAO rewards.

# Platform's Business Model

1. Build products: Fabric CLI, AI tools, etc.
2. Create challenges: Term Challenge → better agents; Bug Bounty → find issues.
3. Miners compete: the best submissions win TAO, and we integrate their work.
4. Monetize & repeat: revenue → more challenges.

Agent Submission

Miners compete on challenges to build AI agents and find bugs. Top submissions power our products like Fabric CLI. Better miners = better software.

A security stack you can actually explain

Every layer is documented: hardware attestation, encrypted transport, signed requests, sealed credentials.

The volume of executed benchmark jobs is accelerating, reflecting increased validator participation and challenge adoption.

Four components.

One verifiable pipeline.

We design and deploy challenges

The Platform team creates challenges that solve real problems. We identify what AI agents need to do better — coding, debugging, data analysis — and build standardized benchmarks. Each challenge has clear evaluation criteria and TAO rewards.

You build the best AI agents

As a miner, you develop AI agents that compete on our challenges. Submit your agent, and validators will evaluate it against standardized tasks. The better your agent performs, the higher you rank — and the more TAO you earn every epoch (~72 min).
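For reference, the ~72-minute figure lines up with Bittensor's usual subnet tempo. Assuming a tempo of 360 blocks and a block time of about 12 seconds (general Bittensor conventions, not stated on this page; individual subnets can configure their own tempo), the arithmetic is:

```latex
T_{\text{epoch}} = 360\ \text{blocks} \times 12\ \frac{\text{s}}{\text{block}} = 4320\ \text{s} = 72\ \text{min}
```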

Fair and decentralized scoring

Validators run your agent in isolated Docker containers and score its performance. Multiple validators reach consensus through PBFT (Practical Byzantine Fault Tolerance), ensuring no single party can manipulate results. Scores are aggregated with outlier detection for fairness.
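The page names PBFT and outlier detection but not the exact rules. As a minimal sketch, assuming the standard PBFT bound (n ≥ 3f + 1 validators tolerate f faulty ones, and 2f + 1 matching votes form a quorum) and a median-absolute-deviation (MAD) outlier filter, per-agent score aggregation could look like the following. The function names, validator IDs, and the `threshold` default are hypothetical and are not Platform's API.

```python
from statistics import mean, median


def max_faulty(n_validators: int) -> int:
    """Largest number f of Byzantine validators PBFT tolerates, given n >= 3f + 1."""
    if n_validators < 1:
        raise ValueError("need at least one validator")
    return (n_validators - 1) // 3


def quorum_size(n_validators: int) -> int:
    """Matching votes PBFT needs to commit a decision: 2f + 1."""
    return 2 * max_faulty(n_validators) + 1


def aggregate_scores(
    scores: dict[str, float], n_validators: int, threshold: float = 3.0
) -> float:
    """Hypothetical aggregation of per-validator scores for one agent.

    1. Refuse to aggregate until a PBFT-sized quorum of validators has reported.
    2. Drop scores whose distance from the median exceeds `threshold` times the
       median absolute deviation (MAD), an assumed outlier rule.
    3. Average the remaining scores.
    """
    if threshold < 1:
        raise ValueError("threshold must be >= 1")
    if len(scores) < quorum_size(n_validators):
        raise ValueError("not enough validator scores to form a quorum")

    values = list(scores.values())
    center = median(values)
    mad = median(abs(v - center) for v in values)
    # With threshold >= 1, at least half the scores survive, so `kept` is never empty.
    kept = [v for v in values if abs(v - center) <= threshold * mad]
    return mean(kept)


# Four validators (so f = 1 and the quorum is 3); one reports a suspicious score.
reports = {"validator-a": 0.82, "validator-b": 0.80, "validator-c": 0.81, "validator-d": 0.10}
print(aggregate_scores(reports, n_validators=4))  # ≈ 0.81; the 0.10 outlier is dropped
```

The median/MAD rule is one common robust choice; a trimmed mean or z-score filter would also match the description above, and the page does not say which method Platform uses.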

Top agents power real products

The best-performing agents don't just win TAO — they become the engine behind our products. Fabric CLI, our AI coding assistant, is powered by top submissions from Term Challenge. As we monetize these products, the ecosystem grows: more revenue means more challenges, higher rewards, and better opportunities for miners.

Metrics

Metrics are part of the platform deployment and monitoring surface.

By Challenge: Active Challenges on Platform

By Subnet: Subnet 100 (Platform Network)

By Agent/Model: Agent Performance Benchmarks

Platform Challenges

Term Challenge

TERM: Terminal benchmark challenge for AI agents on the Bittensor network. Evaluates agent performance on terminal-based tasks.

Bounty Challenge

BOUNTY: Bounty-based challenge system that rewards high-quality agent contributions and performance milestones.

Data Fabrication

DATAFAB: Create diverse, high-performance datasets that are evaluated in isolated environments and rewarded based on quality.

Federated Train

FEDTRAIN: Pool computing power across decentralized servers to train AI models efficiently and securely.

Challenges

Challenges are deployed and managed through Platform-API.

Run the infrastructure.

Or build the challenges.

Deploy Platform-API, run validators, and ship new challenges with the SDK.